The Factory pattern can be implemented in several ways; which one you choose depends on what you are comfortable with and how well it accommodates future extensions. A factory class encapsulates object-creation logic so that other classes can obtain object instances without knowing how those objects are created. It gives developers a central location for creational logic instead of scattering that logic throughout the application code.

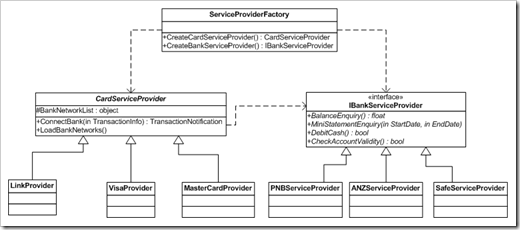

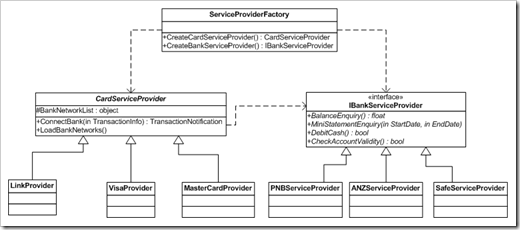

I’m going to show an example of how I used reflection and a custom configuration section in the .NET Framework to create my factory class. The example is based on a simple ATM system that communicates with card service providers (CSPs) to carry out transactions. A CSP in turn communicates with its underlying bank network (bank service providers) to complete the transaction. Because there can be several card service providers and banking networks, I’ve extracted a common interface for each of them. Here is what the UML looks like for them:

In the above class diagram, CardServiceProvider is an abstract class with a virtual ConnectBank() method and an abstract LoadBankNetworks() method. Every derived CSP is responsible for loading its own banking network. The ATM client is not aware of any concrete CSP or banking network implementation; it knows only the static ServiceProviderFactory class and the CSP and banking network abstractions. The following code shows both the CSP base class and the banking network interface.

public abstract class CardServiceProvider

{

/// <summary>

/// This dictionary holds the bank service provider for a given card number

/// prefix (the first few digits), depending on the card service provider,

/// e.g. a card number starting with 6465 belongs to ANZ bank within the Visa network

/// </summary>

protected Dictionary<int, IBankServiceProvider> _bankNetworkList;

public CardServiceProvider()

{

_bankNetworkList = new Dictionary<int, IBankServiceProvider>();

}

/// <summary>

/// Connects to bank network for transaction completion

/// </summary>

public virtual TransactionNotification ConnectBank(TransactionInfo transactionInfo)

{

//Only load list if it is not loaded yet

if (_bankNetworkList.Count == 0)

{ LoadBankNetworks(); }

TransactionNotification transactionNotification = new TransactionNotification();

int identifier = GetBankNetworkIdentifier(transactionInfo.CardNumber);

IBankServiceProvider bankServicerProvider = _bankNetworkList[identifier];

bankServicerProvider.TransactionInfo = transactionInfo;

if (bankServicerProvider.CheckAccountValidity())

{

switch (transactionInfo.TransactionType)

{

case TransactionTypeCode.BalanceEnquiry:

transactionNotification.Balance = bankServicerProvider.BalanceEnquiry();

transactionNotification.CSPNotificationType = NotificationTypeCode.BankTransactionSuccess;

break;

case TransactionTypeCode.WithDrawCash:

transactionNotification.IssueCash = bankServicerProvider.DebitCash(transactionInfo.WithDrawCashAmount);

transactionNotification.CSPNotificationType = transactionNotification.IssueCash ? NotificationTypeCode.BankTransactionSuccess : NotificationTypeCode.NotEnoughBalance;

break;

case TransactionTypeCode.MiniStatementEnquiry:

transactionNotification.MiniStatement = bankServicerProvider.MiniStatementEnquiry(transactionInfo.StatementStartDate,

transactionInfo.StatementEndDate);

transactionNotification.CSPNotificationType = NotificationTypeCode.BankTransactionSuccess;

break;

default:

transactionNotification.CSPNotificationType = NotificationTypeCode.AccountBlocked;

break;

}

}

else

{ transactionNotification.CSPNotificationType = NotificationTypeCode.AccountBlocked; }

return transactionNotification;

}

/// <summary>

/// Every card service provider maintains its own list of banks

/// </summary>

public abstract void LoadBankNetworks();

/// <summary>

/// By default the first 4 digits identify the bank network

/// </summary>

/// <param name="cardInfo">card number</param>

/// <returns>4 digits identifier</returns>

protected virtual int GetBankNetworkIdentifier(string cardInfo)

{

return Convert.ToInt32(cardInfo.Substring(0, 4));

}

}

public interface IBankServiceProvider

{

/// <summary>

/// Gets balance from the account

/// </summary>

float BalanceEnquiry();

/// <summary>

/// Gets mini transaction statement

/// </summary>

/// <param name="StartDate">Transaction start date</param>

/// <param name="EndDate">Transaction end date</param>

DataTable MiniStatementEnquiry(DateTime StartDate, DateTime EndDate);

/// <summary>

/// If the transaction is successful we also want to log the transaction

/// </summary>

/// <param name="Amount">Withdraw cash requested</param>

/// <returns>True if transaction was successful otherwise false</returns>

bool DebitCash(float Amount);

/// <summary>

/// Checks if the account is valid

/// </summary>

bool CheckAccountValidity();

TransactionInfo TransactionInfo { get; set; }

}

To instantiate classes that implement the above abstract class and interface, I’ve created a custom configuration section in the configuration file. This allows developers to add more concrete CSPs and bank networks in the future, keeping the application open to extension. My configuration section looks like this:

<?xml version="1.0" encoding="utf-8"?>

<configuration>

<configSections>

<section name="CardServices" type="ATM.Transaction.Lib.CardServiceProviderSection, ATM.Transaction.Lib" />

</configSections>

<CardServices>

<CardProvider>

<add keyvalue="Visa" type="ATM.Transaction.Lib.VisaProvider">

<Codes>

<add keyvalue="6465" banktype="ATM.Transaction.Lib.ANZServiceProvider" />

<add keyvalue="2323" banktype="ATM.Transaction.Lib.PNBServiceProvider"/>

</Codes>

</add>

<add keyvalue="Link" type="ATM.Transaction.Lib.LinkProvider">

<Codes>

<add keyvalue="5554" banktype="ATM.Transaction.Lib.PNBServiceProvider" />

<add keyvalue="6464" />

</Codes>

</add>

<add keyvalue="MasterCard" type="ATM.Transaction.Lib.MasterCardProvider">

<Codes>

<add keyvalue="7271" />

<add keyvalue="9595" />

</Codes>

</add>

</CardProvider>

</CardServices>

</configuration>

A given CSP can connect to several banking networks. I have intentionally left out a few bank network types to indicate that they can be added later. The CardServiceProviderSection class, which derives from System.Configuration.ConfigurationSection, represents the configuration section; as the section is read, instances of the concrete classes are created dynamically using reflection. In the above configuration section, <CardProvider> holds a collection of ProviderElement entries, and each ProviderElement contains a <Codes> collection with one or more CodeElement entries. To represent these collections I’ve created a generic CustomElementCollection<T> class that extends System.Configuration.ConfigurationElementCollection. The real trick, using reflection to dynamically create instances of CSPs and bank networks, happens through the type converter attached to the ProviderElement class. See the code below.

internal class CardServiceProviderSection : ConfigurationSection

{

[ConfigurationProperty("CardProvider")]

public CustomElementCollection<ProviderElement> CardServiceProviders

{

get

{

return this["CardProvider"] as CustomElementCollection<ProviderElement>;

}

}

}

internal class ProviderElement : CustomElementBase

{

[TypeConverter(typeof(ServiceProviderTypeConverter))]

[ConfigurationProperty("type", IsRequired = true)]

public CardServiceProvider ProviderInstance

{

get

{

return this["type"] as CardServiceProvider;

}

}

[ConfigurationProperty("Codes")]

public CustomElementCollection<CodeElement> Codes

{

get

{

return this["Codes"] as CustomElementCollection<CodeElement>;

}

}

}

internal class CodeElement : CustomElementBase

{

[TypeConverter(typeof(ServiceProviderTypeConverter))]

[ConfigurationProperty("banktype", IsRequired = false)]

public IBankServiceProvider BankProviderInstance

{

get

{

return this["banktype"] as IBankServiceProvider;

}

}

}

internal sealed class ServiceProviderTypeConverter : ConfigurationConverterBase

{

public override bool CanConvertFrom(ITypeDescriptorContext ctx, Type type)

{

return (type == typeof(string));

}

public override object ConvertFrom(ITypeDescriptorContext context, System.Globalization.CultureInfo culture, object value)

{

Type t = Type.GetType(value.ToString());

return Activator.CreateInstance(t);

}

}

The ProviderInstance property of the ProviderElement class is decorated with TypeConverterAttribute, which specifies ServiceProviderTypeConverter. This class overrides ConvertFrom() from ConfigurationConverterBase and uses reflection to return an instance of the type named in the value parameter. The BankProviderInstance property of CodeElement uses the same technique to create instances of bank networks.
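ConvertFrom() as written will fail with a fairly unhelpful exception if a type name in the configuration is misspelled, because Type.GetType() returns null for a type it cannot find. Below is a minimal, self-contained sketch of the same reflection step with two guard checks added. The names IDemoProvider, DemoVisaProvider and ReflectionActivator are hypothetical stand-ins and not part of the ATM library.

```csharp
using System;

// Hypothetical stand-ins for the article's provider types.
public interface IDemoProvider
{
    string Name { get; }
}

public class DemoVisaProvider : IDemoProvider
{
    public string Name { get { return "Visa"; } }
}

public static class ReflectionActivator
{
    // Resolves a type name and returns an instance cast to TContract,
    // failing with a descriptive message when the name is wrong.
    public static TContract Create<TContract>(string typeName) where TContract : class
    {
        // With throwOnError = false, GetType returns null when the type is not found.
        Type type = Type.GetType(typeName, false);
        if (type == null)
        {
            throw new InvalidOperationException("Type not found: " + typeName);
        }

        // Reject types that do not implement or derive from the expected contract.
        if (!typeof(TContract).IsAssignableFrom(type))
        {
            throw new InvalidOperationException(
                type.FullName + " is not assignable to " + typeof(TContract).FullName);
        }

        // Requires a public parameterless constructor on the target type.
        return (TContract)Activator.CreateInstance(type);
    }
}
```

A call such as ReflectionActivator.Create<IDemoProvider>("DemoVisaProvider") returns a DemoVisaProvider instance. Note that a type name without an assembly name is only resolved against the calling assembly and the core library, so the configuration above presumably relies on the provider classes living in the same assembly as the converter; providers in other assemblies would need assembly-qualified names.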

To represent the ProviderElement collection I have used CustomElementCollection<ProviderElement>. The code is shown below.

internal class CustomElementCollection<T> : ConfigurationElementCollection where T : CustomElementBase, new()

{

public T this[int index]

{

get

{

return base.BaseGet(index) as T;

}

set

{

if (base.BaseGet(index) != null)

{

base.BaseRemoveAt(index);

}

this.BaseAdd(index, value);

}

}

protected override ConfigurationElement CreateNewElement()

{

return new T();

}

protected override object GetElementKey(ConfigurationElement element)

{

return ((T)element).KeyValue;

}

}

internal class CustomElementBase : ConfigurationElement

{

[ConfigurationProperty("keyvalue", IsRequired = true)]

public string KeyValue

{

get

{

return this["keyvalue"] as string;

}

}

}

What remains is how to get instances of the concrete classes specified in the configuration. As you may have guessed, that is the job of the static ServiceProviderFactory class, which the ATM client uses to obtain instances.

public static class ServiceProviderFactory

{

private static CardServiceProviderSection csps;

static ServiceProviderFactory()

{

csps = ConfigurationManager.GetSection("CardServices") as CardServiceProviderSection;

}

public static CardServiceProvider CreateCardServiceProvider(string cardNumber)

{

CardServiceProvider csp = null;

foreach (ProviderElement pe in csps.CardServiceProviders)

{

if (pe.Codes.OfType<CustomElementBase>().Select(elm => elm.KeyValue).Contains(cardNumber.Substring(0, 4)))

{

csp = pe.ProviderInstance;

break;

}

}

return csp;

}

public static Dictionary<int, IBankServiceProvider> CreateBankServiceProvider(string keyValue)

{

var providers = from pe in csps.CardServiceProviders.OfType<ProviderElement>()

from c in pe.Codes.OfType<CodeElement>()

where pe.KeyValue == keyValue

select new { KeyValue = Convert.ToInt32(c.KeyValue), Provider = c.BankProviderInstance };

Dictionary<int, IBankServiceProvider> bankProviders = new Dictionary<int, IBankServiceProvider>();

foreach (var p in providers)

{ bankProviders.Add(p.KeyValue, p.Provider); }

return bankProviders;

}

}

Inside the static constructor I read the custom configuration section. The static constructor runs once, the first time ServiceProviderFactory is used. The other static methods in this class are only responsible for looking up the correct CSP and bank network instances when called.

The ATM client can use the following code to get an instance of the CardServiceProvider class:
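The listings do not include a concrete LoadBankNetworks() implementation. One plausible way a derived CSP could fill its dictionary is to ask the factory for the banks registered under its own key. The sketch below is a self-contained miniature of that idea under stated assumptions: IBankStub, AnzStub, FactoryStub, ProviderBase and VisaProviderSketch are all hypothetical simplifications of the article's types, and FactoryStub returns hard-coded data where the real factory would read the configuration.

```csharp
using System;
using System.Collections.Generic;

// Simplified, hypothetical stand-ins for the article's types.
public interface IBankStub
{
    string BankName { get; }
}

public class AnzStub : IBankStub
{
    public string BankName { get { return "ANZ"; } }
}

public static class FactoryStub
{
    // Stands in for ServiceProviderFactory.CreateBankServiceProvider(keyValue);
    // the data is hard-coded here instead of coming from configuration.
    public static Dictionary<int, IBankStub> CreateBankServiceProvider(string keyValue)
    {
        var banks = new Dictionary<int, IBankStub>();
        if (keyValue == "Visa")
        {
            banks.Add(6465, new AnzStub());
        }
        return banks;
    }
}

public abstract class ProviderBase
{
    protected Dictionary<int, IBankStub> _bankNetworkList = new Dictionary<int, IBankStub>();

    public abstract void LoadBankNetworks();

    // Looks up the bank for a card number, loading the list on first use.
    public IBankStub FindBank(string cardNumber)
    {
        if (_bankNetworkList.Count == 0) { LoadBankNetworks(); }

        int identifier = Convert.ToInt32(cardNumber.Substring(0, 4));

        IBankStub bank;
        // TryGetValue returns false for unknown prefixes instead of throwing.
        return _bankNetworkList.TryGetValue(identifier, out bank) ? bank : null;
    }
}

public class VisaProviderSketch : ProviderBase
{
    public override void LoadBankNetworks()
    {
        // "Visa" must match the keyvalue attribute of the provider entry.
        _bankNetworkList = FactoryStub.CreateBankServiceProvider("Visa");
    }
}
```

Because the list is loaded lazily on the first lookup rather than in the provider's constructor, the provider does not call back into the factory while the factory's static constructor is still reading the configuration. The TryGetValue lookup is an addition of this sketch; the ConnectBank() listing indexes the dictionary directly.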

CardServiceProvider csp = ServiceProviderFactory.CreateCardServiceProvider(cardNumber);

More providers can be added later in the configuration without changing the object-creation code or the ATM client.
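As an illustration, registering another provider would presumably only require a new entry inside the CardProvider element. The fragment below is a sketch: the "Amex" key, the AmexProvider class and the 3714 prefix are hypothetical and do not exist in the sample above, while ANZServiceProvider is reused from the existing entries.

```xml
<!-- Hypothetical new entry, placed alongside the existing <add> elements in <CardProvider> -->
<add keyvalue="Amex" type="ATM.Transaction.Lib.AmexProvider">
  <Codes>
    <!-- Card numbers starting with 3714 would be routed to this bank -->
    <add keyvalue="3714" banktype="ATM.Transaction.Lib.ANZServiceProvider" />
  </Codes>
</add>
```

The new class would still have to derive from CardServiceProvider, expose a public parameterless constructor, and be resolvable by the type name given in the type attribute.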
